Taiwan AI Safety Challenge (TAISC)

in

conjunction with IEEE AVSS 2025

Taiwan AI Safety Challenge (TAISC)

in

conjunction with IEEE AVSS 2025

Introduction

The Taiwan AI Safety Challenge is an international competition focused on rapidly addressing road safety issues in Taiwan. Leveraging Vision–Language Models (VLMs), participants will develop solutions for future accident classification from limited dashcam video data. To facilitate advanced model development and efficient fine-tuning on this limited data, limited computing resources utilizing the Phison aiDAPTIV+ hardware platform will be available for eligible participants via an application process; further details on applying will be provided subsequently. This platform is particularly well-suited for training and adapting large VLMs, such as variants like LLaMA 3.2 11B (as an example), enabling rapid prototyping and fine-tuning crucial for this challenge. Participants are encouraged to leverage these resources to demonstrate both quantitative performance and potential real-world applicability.

Short-term Impact: Mobilize global AI talent to tackle Taiwan’s complex road-safety challenges through accurate future accident prediction.

Technical Validation: Demonstrate the effectiveness of VLMs for future accident classification in autonomous driving scenarios.

Global Visibility: Establish Taiwan’s leadership in AI-driven traffic safety research.

Data scarcity: VLMs excel when labeled data are limited.

Societal benefit: Improved accident prediction can save lives.

Efficient Development: The availability of the Phison aiDAPTIV+ platform offers significant advantages for rapidly prototyping and fine-tuning large VLMs, crucial when working with limited datasets.

Research frontiers: Combining large-scale language understanding with video perception.

Task Description

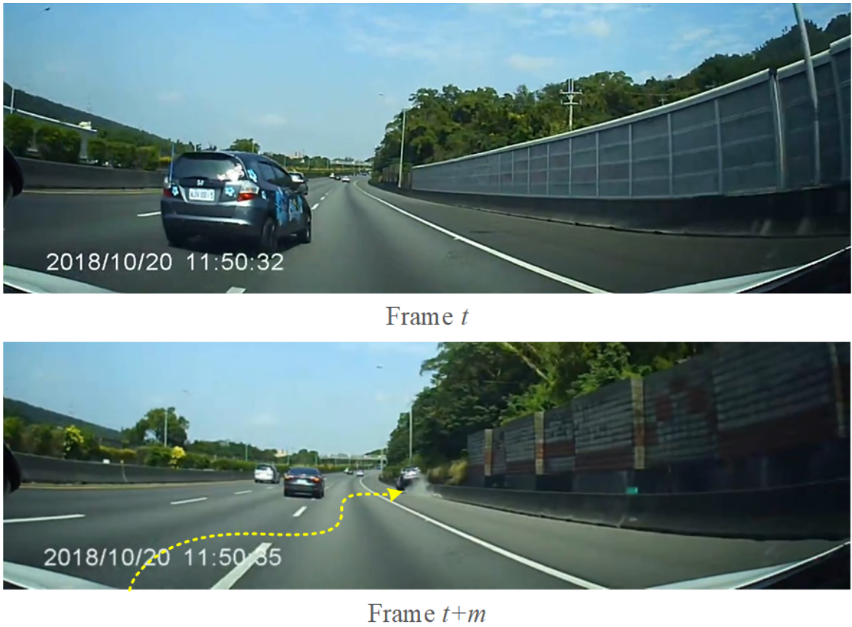

Participants must use a vision–language model (VLM) to predict whether a collision will occur in the second half of a 2‑second dash‑cam video clip, given only the first half of that clip.

Input Data

The first half (1s) of a 2s dash‑cam video segment (same frame rate as the original dataset).

Expected Output

A binary label for the clip:

0 = no accident

1 = accident

Evaluation Metrics

F1‑Score (50 %) — primary metric, balances precision and recall for imbalanced data

Accuracy (30 %)

AUC (20 %)

Videos: 600 dash‑cam clips collected by our team.

Splits: Training 70% / Validation 15% / Test 15% (details on CodeBench).

Reference: Similar to AVA Challenge @ IEEE MIPR 2024.

Vision–Language Model (VLM) required

Every submission must include a VLM component (e.g., CLIP-style dual encoder, Flamingo-style perceiver, video-adapted LLaMA, etc.). The choice of architecture, backbone size, and training strategy is entirely up to each team.

Allowed techniques

Pre-training, fine-tuning, adapter fusion, domain adaptation, knowledge distillation, prompt engineering, or any combination thereof.

Prohibitions

– No manual labeling of test clips.

– No external datasets unless explicitly announced by the organizers.

– Generated data must be clearly documented in the final report.

What it is

A limited pool of cloud GPU hours generously provided by Phison Electronics (群聯電子). These credits are intended to supplement your own compute and lower the entry barrier for teams without large local clusters.

How to apply

Teams may opt-in during registration by submitting a short (≤ 1 page) “compute-need statement”. If demand exceeds supply, slots will be allocated by random draw to keep the process transparent and fair.

Baseline availability, not a mandate

Each aiDAPTIV+ instance ships with a ready-to-run environment featuring Meta LLaMA 3.2-11B plus common video-encoding libs.

You are welcome—but not obliged—to use this model.

Feel free to bring in any other licensed checkpoints or custom VLMs, so long as they fit within the provided GPU quota.

Usage limits

Daily GPU-hour caps and detailed platform guidelines will be published before the competition start. Teams remain responsible for monitoring usage and ensuring reproducibility of their experiments.

Classification: F1‑Score 50%, Accuracy 30%, AUC 20% (F1 is primary).

Promotion (1 month): 5/12–6/12 – announcement, sample data release, code & environment setup.

Competition (2 months): 5/12–7/13 – registration, model development, submissions, Q&A.

Review & Awards (1 month): 7/20–8/1 – top 15 solutions validation, report & code submission; awards on 8/13 at AVSS 2025.

The total prize pool for the Future Accident Classification task is TWD 300,000. Awards for the single track:

1st place: TWD 150,000 + certificate

2nd place: TWD 100,000 + certificate

3rd place: TWD 50,000 + certificate

Lead:

[ E-mail ] Prof. Jun‑Wei Hsieh (National Yang Ming Chiao Tung University, Taiwan)

[ E-mail ] Prof. Chih‑Chung Hsu (National Yang Ming Chiao Tung University, Taiwan)

Co‑organizers:

Prof. Li‑Wei Kang (National Taiwan Normal University, Taiwan)

Prof. Yu‑Chen Lin (Feng Chia University, Taiwan)

Prof. Yi‑Ting Chen (Tatung University, Taiwan)

Prof. Chia‑Chi Tsai (National Cheng Kung University, Taiwan)

Prof. Ming‑Der Shieh (National Cheng Kung University, Taiwan)

Prof. Wen‑Yu Su (Fun AI / Program the World)

President Chuan‑Yu Chang (National Yunlin University of Science and Technology, Taiwan)

Phison Electronics (Taiwan)

College of Artificial Intelligence (National Yang Ming Chiao Tung University, Taiwan)

Supported by:

National Science and Technology Council (NSTC)