Video Super-Resolution Project

Chih-Chun Hsu¹, Chia-Wen Lin¹, and Li-Wei Kang

¹Department of Electrical Engineering, National Tsing Hua University, Hsinchu 30013, Taiwan

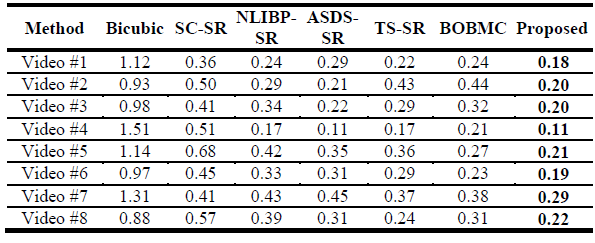

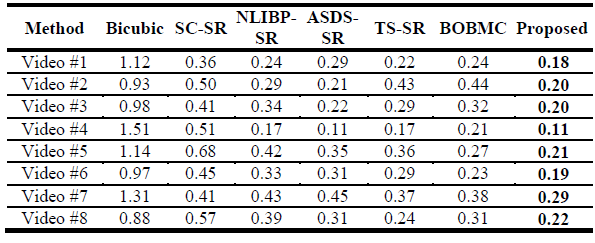

To quantitatively evaluate the performance of the various SR schemes, we use the motion-based video integrity evaluation (MOVIE) metric proposed in [27] for video quality assessment. MOVIE is a full-reference quality assessment metric built on a general, spatio-spectrally localized, multi-scale framework for evaluating dynamic video fidelity; it integrates the spatial, temporal, and spatio-temporal aspects of distortion assessment. The smaller the MOVIE index of a video, the higher its visual quality. MOVIE has been shown to be reasonably consistent with human subjective judgments. Because it accounts for temporal distortion, MOVIE is much better suited to evaluating the fidelity of an upscaled video with dynamic textures than spatial quality metrics that ignore temporal information, such as the peak signal-to-noise ratio (PSNR), the structural similarity (SSIM) index, and their variants.
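To make the limitation of purely spatial metrics concrete, the sketch below scores two hypothetical distorted versions of a static clip: one with a constant brightness offset and one whose offset flips sign every frame (flicker). Both have the same per-frame error, so the mean per-frame PSNR rates them identically, whereas a crude frame-difference check separates them. This is only an illustration of why temporal information matters; it is not an implementation of MOVIE [27], and `temporal_error` is a hypothetical helper introduced here.

```python
import math


def psnr(ref, test, peak=255.0):
    """PSNR in dB between two equally sized frames given as flat lists of pixel values."""
    mse = sum((r - t) ** 2 for r, t in zip(ref, test)) / len(ref)
    return float("inf") if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


def mean_frame_psnr(ref_video, test_video):
    """Average per-frame PSNR: each frame is scored independently, so changes between frames are invisible."""
    return sum(psnr(r, t) for r, t in zip(ref_video, test_video)) / len(ref_video)


def temporal_error(ref_video, test_video):
    """Hypothetical crude temporal check (NOT the MOVIE metric): mean absolute mismatch
    between the frame-to-frame changes of the test video and of the reference video."""
    total, count = 0.0, 0
    for k in range(1, len(ref_video)):
        for r0, r1, t0, t1 in zip(ref_video[k - 1], ref_video[k], test_video[k - 1], test_video[k]):
            total += abs((t1 - t0) - (r1 - r0))
            count += 1
    return total / count


# A static reference clip: 6 frames of 4 pixels each.
ref = [[100.0, 120.0, 140.0, 160.0] for _ in range(6)]
# Same error magnitude (4 gray levels) in both: a steady offset vs. a sign-alternating flicker.
steady = [[p + 4.0 for p in frame] for frame in ref]
flicker = [[p + (4.0 if k % 2 == 0 else -4.0) for p in frame] for k, frame in enumerate(ref)]

print(mean_frame_psnr(ref, steady), mean_frame_psnr(ref, flicker))  # identical scores
print(temporal_error(ref, steady), temporal_error(ref, flicker))    # only the flicker is penalized
```

Any score that rates each frame on its own and then averages shares this blind spot, which is the gap a temporally aware metric such as MOVIE is designed to close.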

Table 1: Objective Evaluation by MOVIE Index for the Reconstructed SR Videos Obtained Using the Bicubic [3], SC-SR [9], NLIBP-SR [6], ASDS-SR [11], TS-SR [26], BOBMC [17], and the Proposed Method (Smaller MOVIE Value Indicates Higher Visual Quality).

Table 1 compares the MOVIE indices of the SR results for the four test videos obtained using Bicubic, SC-SR, NLIBP-SR, ASDS-SR, TS-SR, BOBMC [17], and the proposed method. Because BOBMC [17] assumes that each HR key frame is always available, for a fair comparison we first apply TS-SR to upscale each LR key frame and then use BOBMC to upscale the non-key frames. Table 1 shows that the proposed method outperforms the compared methods in terms of MOVIE, which we attribute to its ability to maintain temporal consistency across consecutive upscaled video frames.
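The key-frame setup used for the BOBMC comparison can be sketched as a two-pass schedule: key frames are upscaled on their own first, and each non-key frame is then upscaled with the help of already-upscaled HR key frames. This is a minimal sketch under several assumptions not stated in the text: the key-frame interval (`key_interval`) is a free parameter, the last frame is treated as a key frame so every gap is closed, and each non-key frame uses the nearest preceding and following HR key frames (suggested by the "bi-directional" in BOBMC's name; [17] defines the actual procedure). All names are hypothetical, and the two upscalers are injected as callables rather than implementing TS-SR or BOBMC.

```python
from typing import Callable, List, Optional, Sequence

Frame = List[float]  # hypothetical: one frame stored as a flat list of pixel values


def upscale_with_key_frames(
    lr_frames: Sequence[Frame],
    key_interval: int,
    upscale_key: Callable[[Frame], Frame],
    upscale_non_key: Callable[[Frame, Frame, Frame], Frame],
) -> List[Frame]:
    """Upscale key frames on their own, then upscale each non-key frame using the
    nearest preceding and following HR key frames as references (assumed scheme)."""
    if key_interval < 1:
        raise ValueError("key_interval must be >= 1")
    n = len(lr_frames)
    if n == 0:
        return []
    key_ids = list(range(0, n, key_interval))
    if key_ids[-1] != n - 1:
        key_ids.append(n - 1)  # assumption: last frame is a key frame so every gap is closed
    hr: List[Optional[Frame]] = [None] * n
    # Pass 1: key frames (TS-SR plays this role in the comparison setup).
    for k in key_ids:
        hr[k] = upscale_key(lr_frames[k])
    # Pass 2: non-key frames between consecutive key frames (BOBMC plays this role).
    for a, b in zip(key_ids, key_ids[1:]):
        for k in range(a + 1, b):
            hr[k] = upscale_non_key(lr_frames[k], hr[a], hr[b])
    return hr  # every slot has been filled by one of the two passes


# Usage with toy stand-ins (hypothetical; NOT TS-SR or BOBMC).
calls = []


def fake_key(frame):
    calls.append(("key", frame[0]))
    return [x for x in frame for _ in range(2)]  # toy 2x pixel repetition


def fake_non_key(frame, prev_hr, next_hr):
    calls.append(("non_key", frame[0]))
    return [(p + q) / 2 for p, q in zip(prev_hr, next_hr)]  # toy blend of neighboring HR key frames


lr = [[float(k)] for k in range(5)]
hr = upscale_with_key_frames(lr, 3, fake_key, fake_non_key)
print(hr)
```

Injecting the upscalers keeps the scheduling logic separate from any particular SR method, so the same loop could drive other key-frame-based schemes.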

References

[9] J. Yang, J. Wright, T. Huang, and Y. Ma, “Image super-resolution via sparse representation,” IEEE Trans. Image Process., vol. 19, no. 11, pp. 2861–2873, Nov. 2010.

[11] W. Dong, L. Zhang, G. Shi, and X. Wu, “Image deblurring and super-resolution by adaptive sparse domain selection and adaptive regularization,” IEEE Trans. Image Process., vol. 20, no. 7, pp. 1838–1857, July 2011.

[17] B. C. Song, S. C. Jeong, and Y. Choi, “Video super-resolution algorithm using bi-directional overlapped block motion compensation and on-the-fly dictionary training,” IEEE Trans. Circuits Syst. Video Technol., vol. 21, no. 3, pp. 274–285, Mar. 2011.

[26] Y. HaCohen, R. Fattal, and D. Lischinski, “Image upsampling via texture hallucination,” in Proc. IEEE Int. Conf. Comput. Photography, Cambridge, MA, USA, pp. 20–30, Mar. 2010.

[27] K. Seshadrinathan and A. C. Bovik, “Motion tuned spatio-temporal quality assessment of natural videos,” IEEE Trans. Image Process., vol. 19, no. 2, pp. 335–350, Feb. 2010.