NTHU Retargeting Image Dataset (NRID)

Our database: 35 test images

Downloads

Image attributes [Sheet1.xlsx] (Microsoft Excel file)

Image database [NRID.zip] (Zipped file)

Saliency maps: [Ground truth (binary)] [Fang's method [1]] [Itti's method [2]]

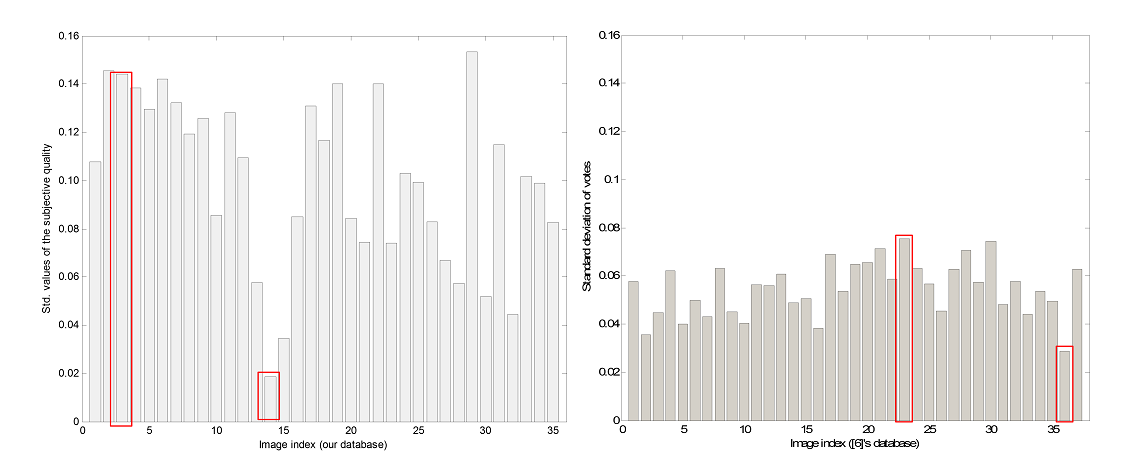

Fig. 1. The std. values of the subjective votes for (left) our NRID database and (right) the RetargetMe database.

Higher std. value: the retargeted results are easier to tell apart in the user study.

Lower std. value: the retargeted results are difficult to distinguish.
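The sketch below illustrates why the std. of the votes reflects how distinguishable the results are. It assumes the votes for one test image are tallied as the number of participants who preferred each retargeted version, and that the population std. is used (the page does not say which). The vote counts and the `vote_std` helper are hypothetical, not NRID data.

```python
from statistics import pstdev

# Hypothetical vote counts: how many participants preferred each of
# eight retargeted versions of one test image (not real NRID data).
clear_winner = [24, 3, 2, 2, 1, 1, 1, 0]  # users agree on one result
no_consensus = [5, 4, 4, 4, 4, 4, 4, 5]   # votes spread almost evenly


def vote_std(votes):
    """Population std. of the per-version vote counts for one image (assumed definition)."""
    return pstdev(votes)


print(f"clear winner: {vote_std(clear_winner):.2f}")
print(f"no consensus: {vote_std(no_consensus):.2f}")
```

Both lists contain the same total number of votes, so the difference in std. comes only from how concentrated the votes are: a concentrated distribution gives a high std. (easy to judge), an even one gives a low std. (difficult to distinguish).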

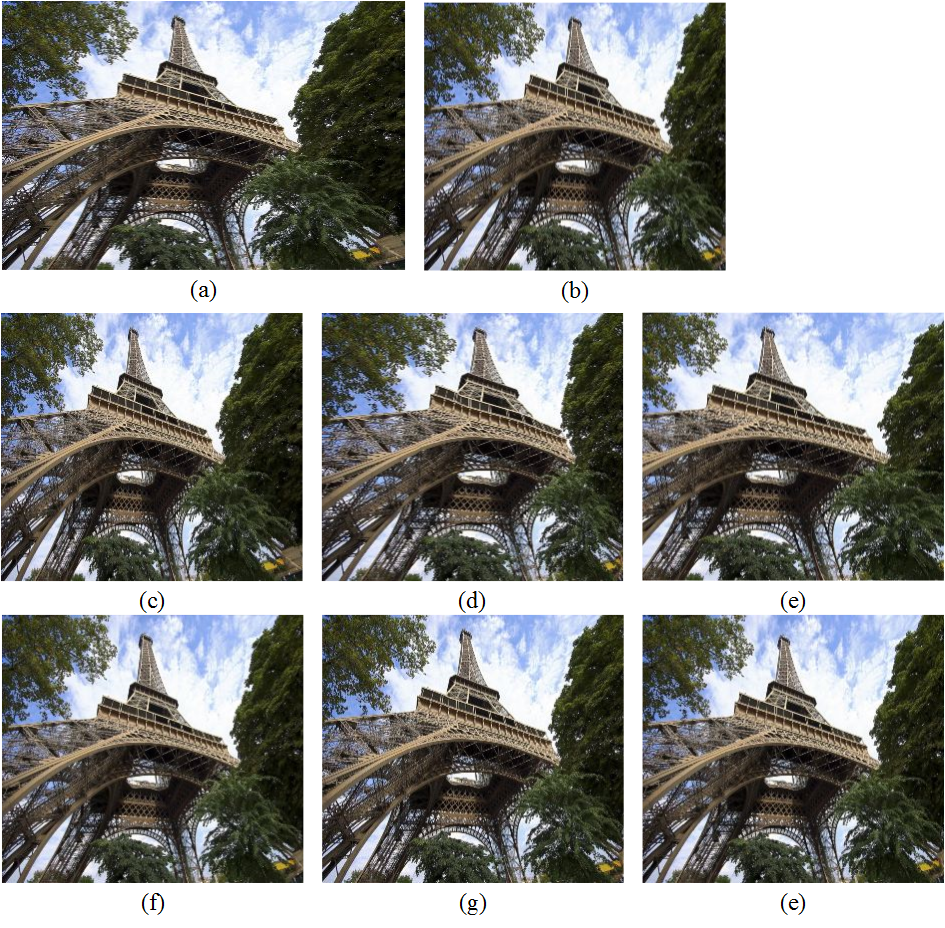

Fig. 2. A test image and its retargeted images in the RetargetMe database (index 36). (a) Original image; (b)-(h) retargeted images produced by different algorithms.

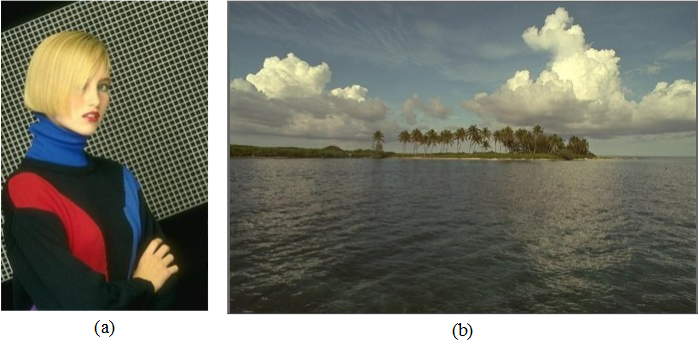

Fig. 3. (a) Test image in NRID with index 14 of Fig. 1 (b). (b) Test image in NRID with index 3 of Fig. 1 (b).

On average, our NRID database has higher std. values than RetargetMe, meaning its retargeted images are easier for users to judge.

Ranking-based database selection

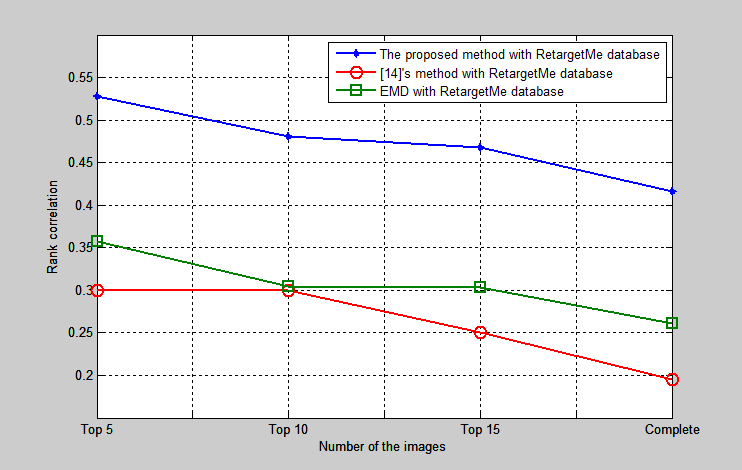

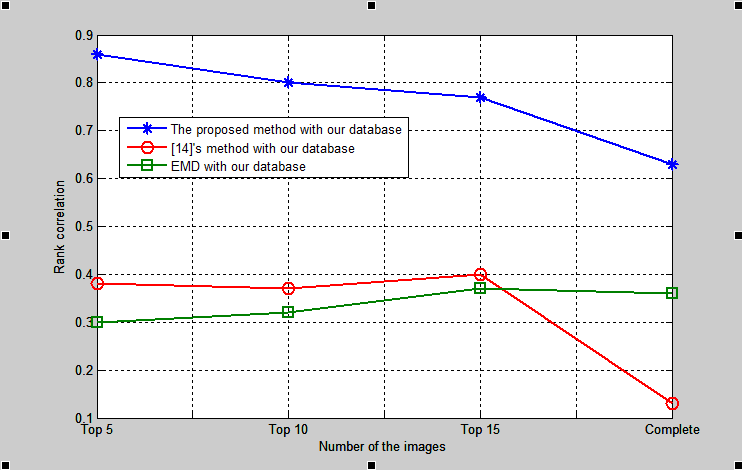

Based on the std. values of the subjective votes, we propose selecting the n test images with the highest std. values. As shown in Figs. 4 and 5, for the images with the top 5 and top 10 highest std. values, the subjective scores are more consistent with the objective scores of the proposed method and the other metrics.
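A minimal sketch of the ranking-based selection, assuming each image's votes are stored as a list of per-version counts and that the population std. is used. The `select_top_n` helper, the image IDs, and the vote counts are hypothetical.

```python
from statistics import pstdev


def select_top_n(votes_per_image, n):
    """Return the IDs of the n images whose vote counts have the highest std."""
    # Std. of the per-version vote counts for every image (population std. assumed).
    stds = {image_id: pstdev(votes) for image_id, votes in votes_per_image.items()}
    # Sort image IDs by std., highest first, and keep the first n.
    return sorted(stds, key=stds.get, reverse=True)[:n]


# Hypothetical vote counts for four images (not real NRID data).
votes = {
    "img_a": [24, 3, 2, 2, 1, 1, 1, 0],
    "img_b": [5, 4, 4, 4, 4, 4, 4, 5],
    "img_c": [12, 10, 6, 2, 2, 1, 1, 0],
    "img_d": [9, 8, 7, 4, 3, 2, 1, 0],
}

print(select_top_n(votes, 2))
```

With the real data, calling `select_top_n` with n = 5 or n = 10 would yield the subsets whose subjective and objective scores are then compared.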

Fig. 4. The complete rank correlation of the subjective and objective quality comparison over different selected testing images.

Fig. 5. The complete rank correlation of the subjective and objective quality comparison over different selected testing images.
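The page does not define the "complete rank correlation". A common choice in retargeting quality assessment, used for example in the RetargetMe study, is Kendall's τ between the subjective and objective rankings of all retargeted versions of an image. The sketch below assumes Kendall's τ-a (tied pairs count as neither concordant nor discordant), computed per image and averaged over the selected images; `kendall_tau` and `mean_tau` are hypothetical helper names, and the scores are made up.

```python
from itertools import combinations


def kendall_tau(subjective, objective):
    """Kendall's tau-a between two score lists for the same retargeted versions.

    Pairs tied in either list count as neither concordant nor discordant.
    """
    if len(subjective) != len(objective) or len(subjective) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    concordant = discordant = 0
    for i, j in combinations(range(len(subjective)), 2):
        # Same sign of both differences -> the pair is ranked the same way.
        sign = (subjective[i] - subjective[j]) * (objective[i] - objective[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    n = len(subjective)
    return (concordant - discordant) / (n * (n - 1) / 2)


def mean_tau(pairs):
    """Average tau over (subjective, objective) pairs, one pair per selected image."""
    taus = [kendall_tau(s, o) for s, o in pairs]
    return sum(taus) / len(taus)


# Hypothetical data: vote counts vs. objective metric scores for two images.
selected = [
    ([24, 3, 2, 1], [0.9, 0.5, 0.6, 0.1]),
    ([10, 8, 5, 1], [0.8, 0.7, 0.4, 0.2]),
]
print(mean_tau(selected))
```

A τ of 1 means the metric ranks the retargeted versions exactly as the users did, and -1 means the opposite order.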

Fig. 6. The five images with the highest std. values in our NRID database.

Fig. 7. The three images with the lowest std. values in our NRID database.

A higher std. value of the subjective votes usually implies that the image contains a prominent salient object. It also implies that the retargeted versions are visually different from one another, so users can judge them easily.

Reference

[1] Y. Fang, W. Lin, Z. Chen, and C.-W. Lin, “Saliency detection in the compressed domain for adaptive image retargeting,” IEEE Trans. Image Process., vol. 21, no. 9, pp. 3888-3901, Sept. 2012.

[2] L. Itti, “Automatic foveation for video compression using a neurobiological model of visual attention,” IEEE Trans. Image Process., vol. 13, no. 10, pp. 1304-1318, Oct. 2004.